Manipulate big data with Arrow & DuckDB

Struggling with memory for your large data sets?

We work more and more with large datasets, requiring interoperability between platforms.

We must then manage a puzzle, between the storage format, exchange, and the analysis of data that does not fit in memory.

For exemple:

- How to analyze 100 Gb of data on a PC which has only 16 Gb of RAM?

- Which format to use for data that can be analyzed by various teams using R, Python or Julia?

- Or applications developed in C++, Java or Rust?

- Apart from the memory, how to reduce the used space on the disk?

- Why load all the data if I want to analyze only one sub-category?

We generally use the .csv format which is easy to read, even by a human, and can be used in any language and on any system. However, this format is not compressed, and therefore takes up disk space. It will need to be fully loaded into memory even if you want to perform an analysis on a subset of the data. This has a cost both in storage and analysis time.

Apache Arrow and DuckDB can solve these problem. They are able to interact with each other, and have libraries for most languages. These are two tools that will become essential to know for the collection, storage and data analysis.

A brief introduction

The objective of this post is to familiarise you with different uses of Arrow and DuckDB by manipulating them, on simple examples that can be useful in a data processing process. But before looking at examples, what are Apache Arrow and DuckDB?

Apache Arrow is a platform that defines an in-memory, multi-language, and columnar data format. It allows copyless data transfer by removing the need for serialization. Arrow is often associated with the Parquet format which is used to store tabular data.

DuckDB is a database management system, columnar, requiring no installation (like SQLite). It is an OLAP (Online Analytical Processing) system, unlike SQLite which is an OLTP (Online Transactional Processing). DuckDB has its storage format (.duckdb), but its engine can also be used directly on Arrow objects or Parquet files.

They therefore both make it possible to process data in columns, as opposed to the .csv format or the OLTP databases which process data online, and are multi-platform, multi-language. They also make it possible to return the result of queries without having to load all the data into memory.

First, I will show different use cases, how to read, write, query with Arrow and DuckDB on a simple dataset (parlmerpenguins). This dataset is small, so it fits in memory, but the goal here is to familiarize yourself with Arrow and DuckDB, on data accessible to everyone, without worrying about downloading large amounts of data.

Secondly, I would use a larger dataset (59 million observations) to benchmark the different formats (.csv, .parquet, .duckdb) and query times depending on the engines and formats.

Before we start, let’s load the necessary packages for this post:

library(arrow)

library(lobstr)

library(tictoc)

library(fs)

library(palmerpenguins)

library(duckdb)

library(dplyr)

Using Arrow

First contact

By loading the palmerpenguins package, the penguins dataframe is available. It is composed of 8 columns and 344 observations.

glimpse(penguins)Rows: 344

Columns: 8

$ species <fct> Adelie, Adelie, Adelie, Adelie, Adelie, Adelie, Adelie, Adelie, Adelie, Adelie, Adelie, Adelie, Adelie, Adelie,…

$ island <fct> Torgersen, Torgersen, Torgersen, Torgersen, Torgersen, Torgersen, Torgersen, Torgersen, Torgersen, Torgersen, T…

$ bill_length_mm <dbl> 39.1, 39.5, 40.3, NA, 36.7, 39.3, 38.9, 39.2, 34.1, 42.0, 37.8, 37.8, 41.1, 38.6, 34.6, 36.6, 38.7, 42.5, 34.4,…

$ bill_depth_mm <dbl> 18.7, 17.4, 18.0, NA, 19.3, 20.6, 17.8, 19.6, 18.1, 20.2, 17.1, 17.3, 17.6, 21.2, 21.1, 17.8, 19.0, 20.7, 18.4,…

$ flipper_length_mm <int> 181, 186, 195, NA, 193, 190, 181, 195, 193, 190, 186, 180, 182, 191, 198, 185, 195, 197, 184, 194, 174, 180, 18…

$ body_mass_g <int> 3750, 3800, 3250, NA, 3450, 3650, 3625, 4675, 3475, 4250, 3300, 3700, 3200, 3800, 4400, 3700, 3450, 4500, 3325,…

$ sex <fct> male, female, female, NA, female, male, female, male, NA, NA, NA, NA, female, male, male, female, female, male,…

$ year <int> 2007, 2007, 2007, 2007, 2007, 2007, 2007, 2007, 2007, 2007, 2007, 2007, 2007, 2007, 2007, 2007, 2007, 2007, 200…We can make a query using dplyr, and we will immediately get the result:

penguins |>

group_by(species, sex) |>

summarise(avg_body_mass = mean(body_mass_g, na.rm = TRUE)) |>

ungroup()# A tibble: 8 × 3

species sex avg_body_mass

<fct> <fct> <dbl>

1 Adelie female 3369.

2 Adelie male 4043.

3 Adelie NA 3540

4 Chinstrap female 3527.

5 Chinstrap male 3939.

6 Gentoo female 4680.

7 Gentoo male 5485.

8 Gentoo NA 4588.How to do the same query using Arrow?

Before seeing how to use Arrow with large data stored on disk, we will reproduce the previous query with Arrow. To do this, we create an arrow object from this dataframe with the function arrow::arrow_table() :

penguins_arrow <- arrow_table(penguins)

penguins_arrowTable

344 rows x 8 columns

$species <dictionary<values=string, indices=int8>>

$island <dictionary<values=string, indices=int8>>

$bill_length_mm <double>

$bill_depth_mm <double>

$flipper_length_mm <int32>

$body_mass_g <int32>

$sex <dictionary<values=string, indices=int8>>

$year <int32>The created object contains the table definition, but does not contain any data at this point.

As for a dataframe, we can use dplyr with an Arrow object. So we repeat the previous query:

penguins_arrow |>

group_by(species, sex) |>

summarise(avg_body_mass = mean(body_mass_g, na.rm = TRUE)) |>

ungroup()Table (query)

species: dictionary<values=string, indices=int8>

sex: dictionary<values=string, indices=int8>

avg_body_mass: double

* Grouped by species

See $.data for the source Arrow objectAgain, no data is returned. We have just defined the query to be evaluated, but we must explicitly request the execution of the query. There are two functions for this compute() and collect().

compute(): runs the query and the data is stored in the Arrow object.collect(): runs the query and returns the data in R as a tibble.

penguins_arrow |>

group_by(species, sex) |>

summarise(avg_body_mass = mean(body_mass_g, na.rm = TRUE)) |>

collect()# A tibble: 8 × 3

# Groups: species [3]

species sex avg_body_mass

<fct> <fct> <dbl>

1 Adelie male 4043.

2 Adelie female 3369.

3 Adelie NA 3540

4 Gentoo female 4680.

5 Gentoo male 5485.

6 Chinstrap female 3527.

7 Chinstrap male 3939.

8 Gentoo NA 4588.We were able to use the usual syntax in R with dplyr to query an Arrow object like we do for a dataframe in R.

Parquet file

We saw a simple example to create an Arrow object from a tibble, and a query using dplyr to get familiar with the syntax. However, the tibble was loaded in memory, what interests us is to query without having to load the data in memory but from data on disk. We will therefore focus on the parquet file format.

Parquet is a format for storing columnar data, with data compression and encoding, and has improved performance for accessing data.

You can save a dataframe or an Arrow table in parquet format with write_parquet()

write_parquet(penguins_arrow, here::here("data", "penguins.parquet"))The write_dataset() function provides a more efficient storage format and in particular it can partition data based on a variable, using Hive style.

For example, the dataframe contains 3 species of penguins. You can decide to partition into as many files as there are species. Thus, 3 files will be created with a tree structure per species, and the files will not need to contain the species variable with the value that is repeated for each observation, which saves space. It’s also faster when analyzing a subset for a single species, since Arrow will only access a single file corresponding to this subset.

write_dataset(penguins,

here::here("data", "penguins_species"),

format = "parquet",

partitioning = c("species"))The structure on the disk is as follows:

dir_tree(here::here("data", "penguins_species"))D:/env/data-pipeline/data/penguins_species

├── species=Adelie

│ └── part-0.parquet

├── species=Chinstrap

│ └── part-0.parquet

└── species=Gentoo

└── part-0.parquetNow that we have the data in parquet format, we will redo the previous workflow, pointing to the file on disk instead and without loading the data into memory in R.

We open an arrow dataset object that points to the multi-file dataset we just created.

penguins_arrow <- open_dataset(here::here("data", "penguins_species"))

penguins_arrowFileSystemDataset with 3 Parquet files

island: dictionary<values=string, indices=int32>

bill_length_mm: double

bill_depth_mm: double

flipper_length_mm: int32

body_mass_g: int32

sex: dictionary<values=string, indices=int32>

year: int32

species: stringAs expected, penguins_arrow does not contain any data, but is an object that will allow us to make a request.

penguins_arrow |>

group_by(species, sex) |>

summarise(avg_body_mass = mean(body_mass_g, na.rm = TRUE)) |>

collect()# A tibble: 8 × 3

# Groups: species [3]

species sex avg_body_mass

<chr> <fct> <dbl>

1 Adelie male 4043.

2 Adelie female 3369.

3 Adelie NA 3540

4 Chinstrap female 3527.

5 Chinstrap male 3939.

6 Gentoo female 4680.

7 Gentoo male 5485.

8 Gentoo NA 4588.We obtain a tibble containing only the result, the data contained in the parquet files have not been loaded into memory in R. Of course this is not perceptible on such a small dataframe, but we will see later the impact on larger data.

Using DuckDB

With Arrow & dplyr

You can use the DuckDB engine to query an Arrow object with dplyr. Nothing could be simpler, just use the to_duckdb() function:

penguins_arrow |>

to_duckdb() |>

group_by(species, sex) |>

summarise(avg_body_mass = mean(body_mass_g, na.rm = TRUE))# Source: lazy query [?? x 3]

# Database: DuckDB 0.5.1 [christophe@Windows 10 x64:R 4.2.0/:memory:]

# Groups: species

species sex avg_body_mass

<chr> <chr> <dbl>

1 Adelie male 4043.

2 Adelie female 3369.

3 Adelie NA 3540

4 Chinstrap female 3527.

5 Chinstrap male 3939.

6 Gentoo female 4680.

7 Gentoo male 5485.

8 Gentoo NA 4588.We see that the result is returned by mentioning “# Database: DuckDB 0.5.1”, it is indeed the DuckDB engine that was used.

One of the advantages of using the DuckDB engine and dplyr may be to use a feature implemented by DuckDB but not yet by Arrow. We can do the opposite, and return to the Arrow engine with to_arrow().

The interoperability between Arrow, DuckDB and dplyr is very easy to use and brings a lot of flexibility for data processing.

With Arrow & SQL

With DuckDB, you can use SQL directly on an Arrow object to perform the query.

We create the connection with the database, then we save the arrow object as a DuckDB virtual table, giving it a name that will be used in the SQL query, finally we execute the query.

con <- dbConnect(duckdb::duckdb())

arrow::to_duckdb(penguins_arrow, table_name = "penguins", con = con)

dbGetQuery(con, "SELECT species, sex, AVG(body_mass_g) FROM penguins GROUP BY species, sex") species sex avg(body_mass_g)

1 Adelie male 4043.493

2 Adelie female 3368.836

3 Adelie <NA> 3540.000

4 Gentoo female 4679.741

5 Gentoo male 5484.836

6 Gentoo <NA> 4587.500

7 Chinstrap female 3527.206

8 Chinstrap male 3938.971Once all the manipulations are done, do not forget to close the connection:

duckdb_unregister(con, "penguins")

dbDisconnect(con)

With Parquet & SQL

It is also possible to access a Parquet file directly in the SQL query, without going through the creation of an Arrow object, by using read_parquet():

con <- dbConnect(duckdb::duckdb())

dbGetQuery(con, "SELECT species, sex, AVG(body_mass_g) FROM read_parquet('penguins.parquet') GROUP BY species, sex")

dbDisconnect(con) species sex avg(body_mass_g)

1 Adelie male 4043.493

2 Adelie female 3368.836

3 Adelie <NA> 3540.000

4 Gentoo female 4679.741

5 Gentoo male 5484.836

6 Gentoo <NA> 4587.500

7 Chinstrap female 3527.206

8 Chinstrap male 3938.971read_parquet() can read a Parquet file or a list of files, however, DuckDB cannot yet open multi-level files created by partitioning. To do this, you must therefore go through Arrow and open the dataset, as seen previously.

Similarly, DuckDB can read CSV files directly, or even create a table from a CSV file, which is great for importing data into a DuckDB table.

DuckDB is a database and therefore also has its own storage format.

.duckdb format

DuckDB’s storage goes beyond the Parquet format. Indeed, it allows to store a database with multiple tables, views, supports ACID operations, alteration of tables, additions of columns without having to completely rewrite the file.

To create the database from R, we use the duckdb() function, we can create the database directly by creating the connection with dbConnect() as below.

con <- dbConnect(duckdb::duckdb(), dbdir="penguins.duckdb", read_only=FALSE)We can then create the penguins table with the data from a dataframe with the following syntax:

duckdb::dbWriteTable(con, "penguins", penguins)You can also create the table with an SQL query by importing the data directly from a file, for example Parquet or csv:

dbSendQuery(con, "CREATE TABLE penguins_parquet AS SELECT * FROM read_parquet('penguins.parquet');")Or from an Arrow object, by registering the Arrow object as a virtual table, and creating the DuckDb table from this table:

arrow::to_duckdb(penguins_arrow, table_name = "penguins_arrow", con = con)

dbSendQuery(con, "CREATE TABLE penguins_arrow AS SELECT * FROM penguins_arrow")You can list the tables contained in the database with the following command:

dbListTables(con)[1] "penguins" "penguins_arrow" "penguins_parquet"The possibilities are very interesting when using very large amounts of data that do not fit in memory. It is thus possible to feed the database from large parquet files via Arrow, without having to load the data into memory in R.

The advantage of using a DuckDB database, in addition to the aforementioned reasons, is that the queries are much faster than from a Parquet file. Of course this has a cost in terms of storage. Indeed, the Parquet format is more compressed, and takes up less disk space compared to a DuckDB database. There is therefore a choice to be made, if we favor speed, compression, and if we need the functionality of a database.

Comparison of formats and engines

Data preparation

We focused on how Arrow and DuckDB work with R on a simple dataset. Now, let’s look at performance on two criteria: space on disk and speed.

For this I will use a larger dataset, prepared from files present on Kaggle.

If you want to reproduce, the files can be downloaded from Kaggle, and here is the code to generate the dataframe.

sales <- read_csv(here::here("data", "M5", "sales_train_evaluation.csv"))

calendar <- read_csv(here::here("data", "M5", "calendar.csv"))

sell_prices <- read_csv(here::here("data", "M5", "sell_prices.csv"))

sales <- sales |>

pivot_longer(cols=contains("d_"), names_to = "d", values_to = "qty")

sales <- sales |>

left_join(calendar, by = "d") |>

left_join(sell_prices, by = c("wm_yr_wk", "store_id", "item_id")) |>

replace_na(list(sell_price = 0))Note that in normal use, it would be better to store each file in a different table and join with Arrow or DuckDB, but the goal here is to create a large dataframe.

In addition the raw data takes about 8 GB which technically still fits in memory on my laptop, but allows me to store the different formats on my disk, while measuring the performance differences.

Size of different file formats

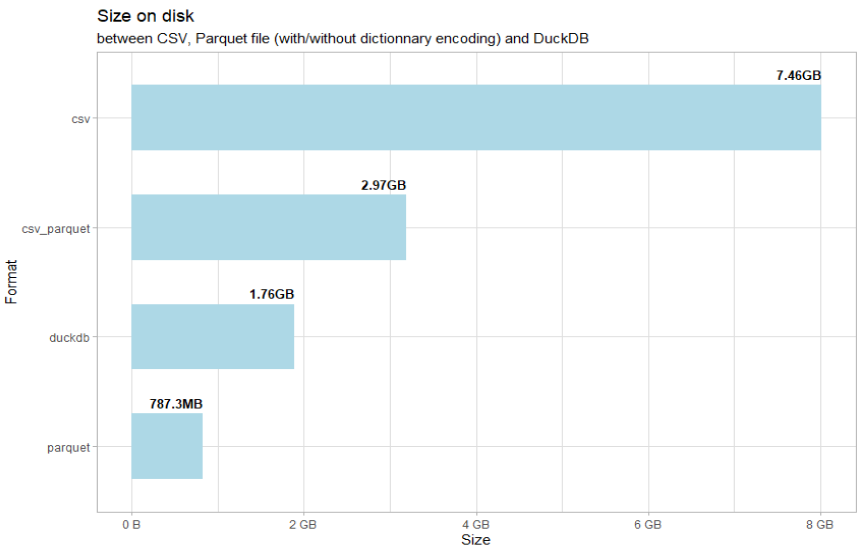

We will create 4 different formats, csv, Parquet created from a csv (to illustrate an important point), Parquet created from a dataframe and DuckDB.

Backup in csv format:

write_csv(sales, here::here("data", "sales.csv"))Write in Parquet format from a csv file using Arrow:

sales_csv_arrow <- open_dataset(here::here("storage", "sales.csv"), format = "csv")

write_dataset(sales_csv_arrow,

here::here("storage", "sales_csv_parquet"),

format = "parquet",

partitioning = c("year", "month"))Write in Parquet format with partitioning from the dataframe:

write_dataset(sales,

here::here("storage", "sales_parquet"),

format = "parquet",

partitioning = c("year", "month"))Creation of the DuckDB database from the partitioned Parquet format:

sales_arrow <- open_dataset(here::here("storage", "sales_parquet"))

con <- dbConnect(duckdb::duckdb(), dbdir=here::here("storage", "sales_duckdb", "sales.duckdb"), read_only=FALSE)

arrow::to_duckdb(sales_arrow, table_name = "sales", con = con)

dbSendQuery(con, "CREATE TABLE daily_sales AS SELECT * FROM sales")Let’s compare the sizes on disk:

disk_size <- dir_info(here::here("storage"), recurse = TRUE) |>

mutate(relative_path = str_remove(path, here::here("storage")),

format = str_match(relative_path, "/(.+?)/")[,2],

format = str_remove(format, "sales_")) |>

arrange(format) |>

group_by(format) |>

summarise(total = sum(size)) |>

ungroup() |>

drop_na() |>

mutate(format = forcats::fct_reorder(format, total))

disk_size |>

ggplot(aes(format, total)) +

geom_col(fill = "lightblue", width=0.6)+

geom_text(aes(label = glue::glue("{total}B")), hjust = 1, nudge_x = 0.4, size = 3.5, fontface = "bold") +

coord_flip()+

scale_y_continuous(labels = scales::label_bytes(units = "auto_si", accuracy = 1))+

labs(title = "Size on disk",

subtitle ="between CSV, Parquet file (with/without dictionnary encoding) and DuckDB",

x = "Format",

y = "Size")+

theme_light()We see that the csv format takes almost 8 GB. The DuckDB format is almost 4 times smaller, and Parquet almost 10 times lighter.

Why is the Parquet format created directly from CSV larger?

Parquet uses dictionary encoding by default to represent strings. It creates a dictionary to keep the key (integer format) and value (string format) association, and thus only stores the data in the form of an integer much smaller than a character string.

Let’s take the example of the cat_id variable. It contains only 3 unique values (“HOBBIES”, “HOUSEHOLD”, “FOODS”) which are repeated 59 million times. Rather than storing 59 million strings, Parquet uses a dictionary to associate each string with an integer (0=“HOBBIES”, 1=“HOUSEHOLD”, 2=“FOODS”), and only stores the value of the integer.

In the case of creating the Parquet file from the in-memory dataframe, Arrow is able to find the unique values to create the dictionary, and therefore uses dictionary encoding.

In the case of a creation from the csv file, it is not possible to know the number of unique values before having processed the entire csv file, it therefore uses the simplest format which is to store the strings as strings.

This is transparent for the user, if we look at the schema, the type of the cat_id variable is always “string”:

sales_arrow$schemaSchema

id: string

item_id: string

dept_id: string

cat_id: string

store_id: string

state_id: string

d: string

qty: double

date: date32[day]

wm_yr_wk: double

weekday: string

wday: double

event_name_1: string

event_type_1: string

event_name_2: string

event_type_2: string

snap_CA: double

snap_TX: double

snap_WI: double

sell_price: double

year: int32

month: int32Note that there is also a dictionary type. For example, if we use factors, which is the case for the species, island and sex variables of the penguins dataset, we see that the factors are stored with the data type dictionary.

arrow_table(penguins)Table

344 rows x 8 columns

$species <dictionary<values=string, indices=int8>>

$island <dictionary<values=string, indices=int8>>

$bill_length_mm <double>

$bill_depth_mm <double>

$flipper_length_mm <int32>

$body_mass_g <int32>

$sex <dictionary<values=string, indices=int8>>

$year <int32>I won’t go into the type, schema, etc. aspects here any further, but it’s important to be aware of them for at least two reasons:

- Use the most appropriate type: for example if an integer only takes a value between 0 and 255, use int8 rather than int32. Python users are already aware of this type of conversion, but this is less the case with R, with which we just use integer, double without any notion of int8, int16, int32 or float16, float32, float64.

- Join: The join between two files will fail if the data types used for the join are different, it is then necessary to convert one of the two variables so that they have the same type.

Query Performance

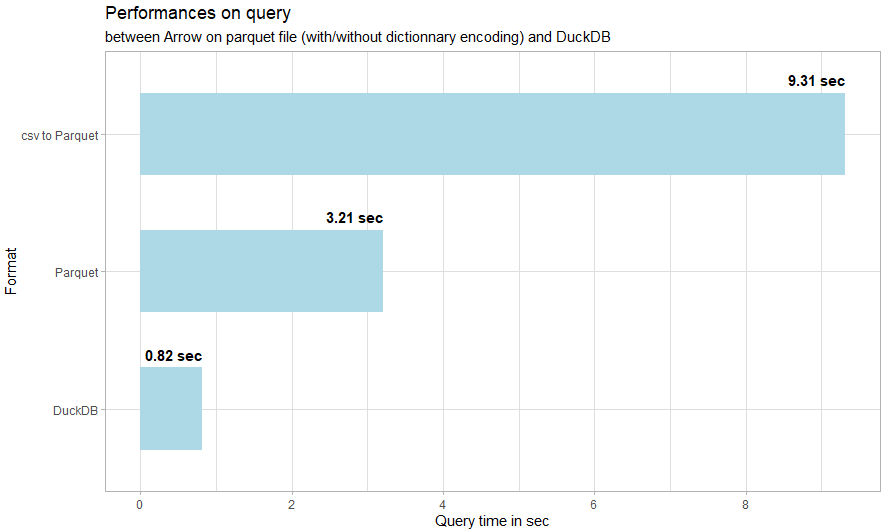

We are going to compare the performances on a request allowing to calculate the monthly incomes by category and by state, for the year 2015. It allows to have at the same time a filter, a group by, a calculation, and a sorting.

For the CSV file, I separated the time for querying and reading the data:

tic()

sales <- read_csv(here::here("storage", "sales_csv", "sales.csv"))

toc()

tic()

sales |>

filter(year==2015) |>

group_by(state_id, cat_id, month) |>

summarise(monthly_revenue = sum(qty * sell_price, na.rm = TRUE)) |>

ungroup() |>

arrange(cat_id, month)

toc()2258.86 sec elapsed

44.07 sec elapsed

Creating this query on a csv file takes a total of 2302.93 seconds, more than 38 minutes, including 37 for reading the data, and 44 for the query once the data is loaded into memory.

For parquet without dictionary encoding:

tic()

sales_arrow <- open_dataset(here::here("storage", "sales_csv_parquet"))

sales_arrow |>

filter(year==2015) |>

group_by(state_id, cat_id, month) |>

summarise(monthly_revenue = sum(qty * sell_price, na.rm = TRUE)) |>

ungroup() |>

arrange(cat_id, month) |>

collect()

toc()9.31 sec elapsed

For parquet with dictionary encoding:

tic()

sales_arrow <- open_dataset(here::here("storage", "sales_parquet"))

sales_arrow |>

filter(year==2015) |>

group_by(state_id, cat_id, month) |>

summarise(monthly_revenue = sum(qty * sell_price, na.rm = TRUE)) |>

ungroup() |>

arrange(state_id,cat_id, month) |>

collect()

toc()3.21 sec elapsed

And finally DuckDB :

tic()

con <- dbConnect(duckdb::duckdb(), dbdir=here::here("storage", "sales_duckdb", "sales.duckdb"), read_only=TRUE)

dbListTables(con)

dbGetQuery(con, "SELECT state_id, cat_id, month, SUM(qty * sell_price) FROM daily_sales WHERE year = 2015 GROUP BY state_id, cat_id, month ORDER BY state_id, cat_id, month")

duckdb::dbDisconnect(con)

toc()0.82 sec elapsed

We can clearly see that the csv format is very far in terms of performance, with 2303 seconds, or more than 38 minutes. I therefore exclude it from the visualization to be able to more easily visualize the differences between parquet_csv, parquet and duckdb:

performance <- tibble(format = c("csv", "csv_parquet", "parquet", "duckdb"),

format_text = c("csv", "csv to Parquet", "Parquet", "DuckDB"),

elapse_time = c(2302.93, 9.31, 3.21, 0.82))

performance |>

filter(format != "csv") |>

mutate(format_text = forcats::fct_reorder(format_text, elapse_time)) |>

ggplot(aes(format_text, elapse_time))+

geom_col(fill = "lightblue", width=0.6)+

geom_text(aes(label = glue::glue("{elapse_time} sec")), hjust = 1, nudge_x = 0.4, size = 4, fontface = "bold") +

coord_flip()+

scale_y_continuous(breaks=seq(0,10,2)) +

labs(title = "Performances on query",

subtitle ="between Arrow on parquet file (with/without dictionnary encoding) and DuckDB",

x = "Format",

y = "Query time in sec")+

theme_light()Regarding performance, parquet is 717 times faster than the same query on a csv file, and duckdb is 2808 times faster.

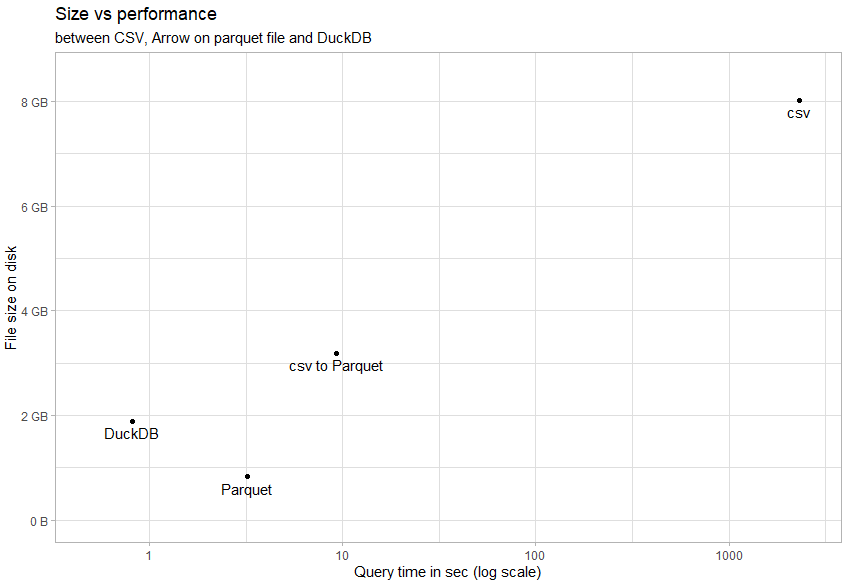

DuckDB is 4 times faster than the query on a parquet file. Keep in mind that there is a compromise to be made since the parquet file is more compressed and takes up 2.5 times less space than the base in duckdb format.

We can visualize a summary with the trade-off size / performance (logarithmic scale):

disk_size |>

left_join(performance) |>

ggplot()+

geom_point(aes(elapse_time, total)) +

geom_text(aes(elapse_time, total, label = format_text), nudge_y = -1e8, vjust = 1) +

scale_x_log10(limits=c(0.5,2500))+

scale_y_continuous(labels = scales::label_bytes(units = "auto_si", accuracy = 1), limits = c(0.5e9,8.5e9)) +

labs(title = "Size vs performance",

subtitle ="between CSV, Arrow on parquet file and DuckDB",

x = "Query time in sec (log scale)",

y = "File size on disk")+

theme_light()

Memory usage

We have seen at the performance level that for the case of a csv file, all the data is loaded into memory, whereas for Arrow and DuckDB, only the result is returned.

We can also be sure by looking at the size of the objects:

CSV file loaded in memory:

str_obj(sales)10.42 GB

Using Arrow:

str_obj(sales_arrow)261.59 kB

When executing the query on the data from the csv file, the memory used by R on my laptop exceeded 13 GB (dataframe size + query), while it remained below 1 GB for the query with Arrow.

Conclusion

We saw how to use Apache Arrow and DuckDB for common manipulations, switch from one engine to another, from one format to another, use dplyr or SQL, and finally see the benefits in terms of storage, performance for queries, without loading the data into memory in R.

We therefore have all the elements to define the most suitable architecture for collecting and analyzing voluminous data.

Summarizing the benefits:

- Interoperability between Arrow, DuckDB and dplyr

- Analysis of data that does not fit in memory

- Compressed formats lighter in place than traditional CSV

- Query time performance

- Cross-platform and multi-language, you can use the DuckDB dataset or database created with R on Windows without problems with Python or Julia on Linux

I used Apache Arrow and DuckDB together to manipulate large datasets. However, it should be noted that DuckDB is an OLAP database management system that provides more functionality than Apache Arrow to build an analytical solution.

Note: I only focused on the Parquet format so as not to overload the post, but Apache Arrow also uses the Feather format, which is lighter, but less compressed.